This site shows a selection of projects I worked on during my studies at the University of Magdeburg. Many of them are class projects from my undergraduate and graduate studies, while others originate from my work as a research assistant in various departments. The list is loosely divided into undergrad/Master projects and PhD projects, see below. Some of the projects were developed and implemented in groups or with the help of others; the names of the people involved, as well as project-related publications, are listed. If you have any questions or comments, please feel free to contact me. Accompanying publications can be found here.

Undergrad and Diplom/Master Projects


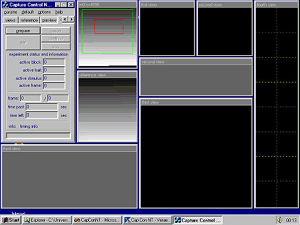

Software Internship (Niklas Röber, Michael Schild) For this undergraduate project, we developed a capturing and image analysis tool for the neurosciences. It allows users to visually capture and measure the brain functions of gerbils that were exposed to acoustic stimuli. The program makes it possible to set up an experiment with acoustic stimuli, to carry it out, and later to analyze and visualize the resulting data. It drives a special parallel-port hardware device as well as the camera system. The implementation was done in C++.

fig.1 Screenshot of the CapConNT system

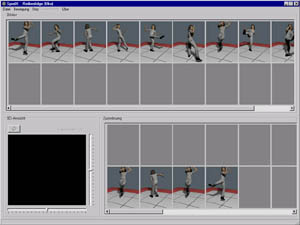

Sports Science (Niklas Röber, Dennis Kurz) Another project was devoted to exploring the possibilities of cognitive learning in sports science. The goal was to develop a program that assists athletes in correctly learning and training sport techniques. The program was initially created for Judo, but can easily be adapted to other sports. The user has to assemble the correct movements from pre-rendered images, animations and 3D visualizations. The movement data was captured using an optical motion capture system and transferred to an artificial character in 3D Studio Max 2.5. To make use of the animation data, an exporter plug-in had to be developed for 3D Studio Max. The progress of the learning process is displayed intuitively using graphics, and the system is also interfaced with an EEG system to measure progress more precisely. The software was developed in Delphi and has been used with great success ever since.

fig.2 Screenshot of the cognitive learning system

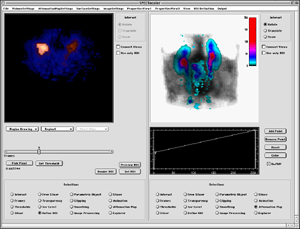

Internship MIRG (Niklas Röber) During my 7-month internship with the Medical Imaging Research Group at the Vancouver General Hospital in 1999/2000, I developed an analysis and visualization environment for dynamic SPECT (dSPECT) data sets. The software can render the data in many variations, including iso-surfaces, traditional slices and volume rendering. The user can freely interact with and slice the data set, as well as perform basic image and volume processing tasks to enhance the data or to visualize certain parts in detail. Several volumes of interest (VOIs) can be segmented and analyzed semi-automatically, and the results are displayed as time-activity curves. Animations can also be created, showing either the entire data set or changes of specified parameters. Additional volume data, such as morphological information, can be combined with the functional dSPECT data set. The software was developed on a Macintosh using IDL 5.0. See the report here.
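The time-activity curves mentioned above can be illustrated with a small sketch. It is not the original IDL code. It assumes that each time frame is a 3D volume indexed as `volume[z][y][x]`, that a VOI is given as a set of voxel coordinates, and that the curve plots the mean activity inside the VOI per frame. The function name `time_activity_curve` is hypothetical.

```python
from typing import Iterable, List, Sequence, Tuple

Voxel = Tuple[int, int, int]  # (z, y, x) index into a volume


def time_activity_curve(
    frames: Sequence[Sequence[Sequence[Sequence[float]]]],
    voi: Iterable[Voxel],
) -> List[float]:
    """Return the mean activity inside a volume of interest for each time frame."""
    voxels = list(voi)
    if not voxels:
        raise ValueError("the VOI must contain at least one voxel")
    curve = []
    for volume in frames:  # one 3D volume per acquisition time step
        total = sum(volume[z][y][x] for z, y, x in voxels)
        curve.append(total / len(voxels))
    return curve


# Two time frames of a tiny 1 x 2 x 2 volume, VOI made of two voxels.
frames = [
    [[[1.0, 2.0], [3.0, 4.0]]],
    [[[2.0, 4.0], [6.0, 8.0]]],
]
print(time_activity_curve(frames, {(0, 0, 0), (0, 1, 1)}))  # [2.5, 5.0]
```

Using the mean rather than the summed activity makes curves of differently sized VOIs directly comparable.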

fig.3 Screenshot of the dSPECT analysis and visualization system

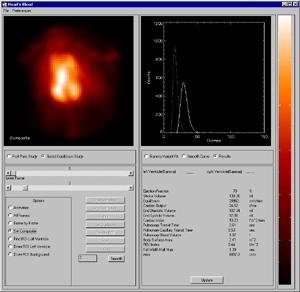

Cardiac Analysis Software (Niklas Röber) This program was developed in cooperation with Gerald S. Zavorsky and Dr. Sexsmith from St. Paul's Hospital and the University of British Columbia in Vancouver, Canada. Gerry was working on his Ph.D. on "Acute hypervolemia lengthens pulmonary transit time during near-maximal exercise in endurance athletes with exercise-induced arterial hypoxemia".

fig.4 Screenshot from the cardiac analysis system

Multimedia CDs and Short Films (Niklas Röber, Martin Spindler, Cosme Lam, Alf Zander, Jens Hasenstein ...) As part of several university projects and classes, three multimedia CDs and four short films were created. Most of these projects were related to topics from education, philosophy and human interaction. Additionally, an extensive advertising campaign for the fictitious product "Bauschaum2000" (insulation foam), including 2 video ads, a large poster and a website, was designed and created. The multimedia CDs were designed and implemented using standard CD authoring software, including Macromedia Director and RSE Author. The films were shot and edited digitally using Adobe Premiere and After Effects, with discreet's combustion* for post-processing.

fig.5 Screenshot from the film "aspiration"

Virtual Reconstructions (Niklas Röber, Maic Masuch, ...) As part of my research assistantship at the Department for Simulation and Graphics from 1998 to 2001, I was responsible for the modeling, texturing and animation of an excavated scene and for the resulting virtual reconstruction of an ancient building. In the 1960s, archaeologists discovered the remains of a building that was long believed to be the palace of Otto the Great, one of the most important European emperors of the Middle Ages. Several reconstructions were created and presented to the public in 2001 as part of an exhibition at the local museum. The exhibition was awarded the title of an "Exhibition of the European Council", as well as the patronage of the German Federal President, Johannes Rau. In early 2000, researchers discovered that the presumed palace was actually a cathedral. To integrate this uncertainty into the renditions, NPR techniques were used in the visualizations to show the varying degree of knowledge about the actual shape of the building. See the accompanying publication here.

fig.6 A photo-realistic rendition from the Kaiserpfalz project

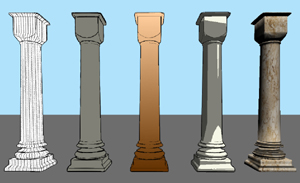

NPR Rendering using OpenGL (Niklas Röber) As a co-op student in my last semester, I implemented several NPR techniques in the freely available OpenGL-based 3D game engine Fly3D. These NPR techniques could also be used to visualize uncertainty in our virtual reconstructions (see previous project). All rendering styles can be combined in the same scene and are rendered in real time. The implemented techniques include classic cartoon rendering, z-coloring, line dithering, warm/cold rendering and tonal art maps. See the report here.
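Warm/cold rendering most likely corresponds to Gooch-style tone shading. In that technique the diffuse term blends between a cool and a warm color instead of darkening toward black. The sketch below uses one common formulation, with warm tones on surfaces facing the light and cool tones on surfaces facing away. It is not the Fly3D implementation, and the color and weight defaults are only example values.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _normalize(v: Vec3) -> Vec3:
    length = _dot(v, v) ** 0.5
    return (v[0] / length, v[1] / length, v[2] / length)


def gooch_shade(
    normal: Vec3,
    to_light: Vec3,
    base_color: Vec3,
    warm: Vec3 = (0.4, 0.4, 0.0),  # yellowish tone (example value)
    cool: Vec3 = (0.0, 0.0, 0.4),  # bluish tone (example value)
    alpha: float = 0.2,            # share of the object color in the cool tone
    beta: float = 0.6,             # share of the object color in the warm tone
) -> Vec3:
    """Blend between a cool and a warm tone based on the angle to the light."""
    n = _normalize(normal)
    l = _normalize(to_light)
    t = (1.0 + _dot(n, l)) / 2.0  # 1 when facing the light, 0 when facing away
    k_cool = tuple(c + alpha * d for c, d in zip(cool, base_color))
    k_warm = tuple(w + beta * d for w, d in zip(warm, base_color))
    return tuple(t * kw + (1.0 - t) * kc for kw, kc in zip(k_warm, k_cool))
```

Because `t` varies smoothly with the angle, shape remains readable even in unlit regions. This is the main reason the style suits technical illustration and reconstructions.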

fig.7 Five different rendering styles in a game engine

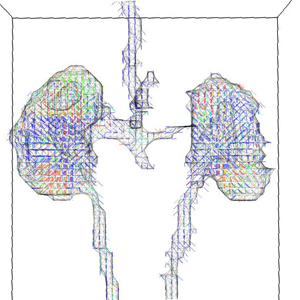

dSPECT Vector Visualization (Niklas Röber) This project focused on the generation of parametric displays that show the direction and strength of flow in a human kidney. Flow information was extracted from dSPECT data sets, interpolated, and visualized using 3D glyphs and hedgehogs. The vectors are computed using a flow-simulation model. Every voxel is assigned to a specific volume of interest. Using this information and the given time-activity curve of the voxel, one can determine average flow vectors, which are fitted to the data set in a second iteration step. For some tissues, such as the ureter, the flow is periodic, because the urine is collected in the renal pelvis (kidney basin) for a while and then released. For this tissue the flow direction is nearly constant, but the speed changes. The actual flow is computed from the initial average flow of each voxel, the flow directions of neighboring voxels, and the rate of change (acceleration and deceleration) of the activity. The resulting vectors represent the flow of the activity and visualize the organ's function. See the accompanying publication here.
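A hedgehog display draws a short line segment at each sample point, oriented along the local vector and scaled by its magnitude. The sketch below shows only this final glyph-generation step for an already computed flow field. It does not reproduce the flow-simulation model. The dictionary-based field layout and the `scale` and `min_length` parameters are assumptions.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def hedgehog_segments(
    field: Dict[Vec3, Vec3], scale: float = 1.0, min_length: float = 1e-6
) -> List[Tuple[Vec3, Vec3]]:
    """Turn a sampled vector field (position -> flow vector) into line segments."""
    segments = []
    for origin, v in field.items():
        length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
        if length < min_length:
            continue  # skip voxels without noticeable flow
        end = tuple(o + scale * c for o, c in zip(origin, v))
        segments.append((origin, end))
    return segments
```

Each segment can then be drawn as a line, or replaced by a 3D arrow glyph, in any renderer.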

fig.8 Hedgehog visualization showing the flow of activity through a human kidney

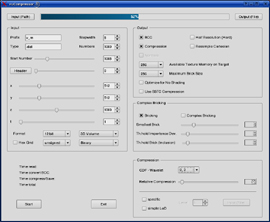

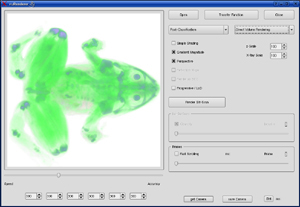

Diplom/Master's Thesis (Niklas Röber) In my Master's thesis I worked on visualization techniques for large volumetric data sets. This research was conducted in the Graphics Lab at Simon Fraser University in Vancouver, Canada. I used programmable graphics hardware to compute the final visualizations; with the latest hardware developments, high-quality images could be rendered at interactive frame rates. In a pre-processing step, the data was loaded and optionally resampled to a more efficient lattice (BCC, D4*). Furthermore, larger data sets were decomposed using wavelets for LOD rendering and broken up into bricks if the entire data set would not fit into the targeted graphics memory. After this processing, the data can be loaded into the visualization system, where the usual volume rendering techniques are available, such as direct volume rendering, iso-surfaces with and without shading, x-ray rendering and maximum intensity projection. Animations of time-varying data sets can also be created. The rendering itself was implemented on graphics hardware, e.g. using 3D textures and register combiners. Several studies were performed to demonstrate the efficiency of the system. Additionally, because the focus of the thesis later shifted, multi-parameter visualization techniques for fuel cell simulations were analyzed and prototypically implemented using the VTK toolkit for visualization and rendering. See the report here.
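To illustrate how the listed rendering modes differ, the sketch below evaluates rays cast straight along the z axis of a small volume on the CPU. The original system ran on graphics hardware (3D textures, register combiners). The function names, the `volume[z][y][x]` layout and the treatment of x-ray rendering as the mean of the samples along a ray are all assumptions.

```python
from typing import List, Sequence, Tuple

Volume = Sequence[Sequence[Sequence[float]]]  # indexed as volume[z][y][x]


def project(volume: Volume, mode: str = "mip") -> List[List[float]]:
    """Orthographic projection along z: 'mip' (maximum) or 'xray' (mean)."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    image = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            samples = [volume[z][y][x] for z in range(depth)]
            if mode == "mip":
                image[y][x] = max(samples)
            elif mode == "xray":
                image[y][x] = sum(samples) / depth
            else:
                raise ValueError(f"unknown mode: {mode}")
    return image


def composite_front_to_back(samples: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Direct volume rendering along one ray.

    `samples` are (color, opacity) pairs ordered from the viewer into the volume;
    returns (accumulated color, accumulated opacity).
    """
    color, alpha = 0.0, 0.0
    for c, a in samples:
        color += (1.0 - alpha) * a * c
        alpha += (1.0 - alpha) * a
        if alpha >= 0.99:  # early ray termination: later samples are hidden
            break
    return color, alpha
```

MIP and x-ray rendering need no transfer function and are independent of sample order. Direct volume rendering instead depends on front-to-back ordering, which is what makes early ray termination possible.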

fig.9/10 Screenshots from the volume rendering system displaying the frog CT dataset

PhD Research

During my time as a PhD student, I was involved in, and also managed and administered, several projects. Most of them were of course related to my main research topic, but neighboring areas were covered as well. Most of the projects presented here were teamwork and were developed and implemented with colleagues and with undergrad/grad students as part of their studies and supervised internships. All names are listed, and the accompanying publications can be found on the publications page.

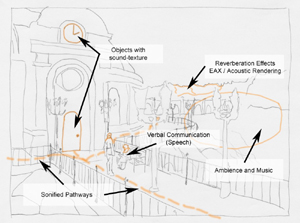

Auditory Environments (Niklas Röber, Daniel Walz, Andre Miede, Jörg Futterlieb) Auditory environments can be seen as the acoustic analog of the more general audio/visual environments. The goal here was to determine and evaluate suitable sonification and interaction techniques to acoustically convey information and to interact intuitively with 3D auditory spaces. Although the areas of application were not limited, there was a slight focus on entertainment and edutainment. An extensive analysis of existing techniques, followed by a prototype implementation for further testing and evaluation, resulted in the design of a new audio framework based on OpenSG and OpenAL. Initially, the framework offered only a few basic sonification and interaction techniques, which grew and evolved over time in subsequent projects. 3D auditory scenes could be authored using 3D Studio MAX for the 3D scene and VRML for setting up the auditory properties.
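Since the framework builds on OpenAL, source loudness falls off with distance according to OpenAL's distance model. The sketch below mirrors, in Python for illustration, the formula that the OpenAL 1.1 specification gives for the default `AL_INVERSE_DISTANCE_CLAMPED` model. Which model and parameters the framework actually used is not stated here, so treat the defaults as assumptions.

```python
def inverse_distance_clamped_gain(
    distance: float,
    reference_distance: float = 1.0,     # corresponds to AL_REFERENCE_DISTANCE
    rolloff_factor: float = 1.0,         # corresponds to AL_ROLLOFF_FACTOR
    max_distance: float = float("inf"),  # corresponds to AL_MAX_DISTANCE
) -> float:
    """Distance attenuation following OpenAL's inverse-distance-clamped model."""
    d = max(distance, reference_distance)  # no boost closer than the reference distance
    d = min(d, max_distance)               # no further attenuation beyond max distance
    return reference_distance / (
        reference_distance + rolloff_factor * (d - reference_distance)
    )
```

With the defaults, a source 4 m away plays at a quarter of its gain. A larger rolloff factor makes distant sources fade faster, which can help listeners judge distance in sparse auditory scenes.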

fig.11 Visualization of a 3D auditory environment

Audiogames (Niklas Röber, Mathias Otto, Cornelius Huber) Using the audio framework from the previous project, we designed and developed 4 simple audio-only computer games. For input and user interaction, we additionally employed a head-tracking device (Polhemus Fastrak), a webcam and a regular gamepad. Three of the games were action games, and the fourth was an auditory adventure set in the cathedral of Magdeburg.

The games were tested by sighted as well as visually impaired people. Although the games are fun to play, players need some time to get used to them, as sighted users in particular are not used to relying on their hearing as much. Difficulties also occurred with the 3D audio adventure, as orientation and navigation within 3D auditory spaces turned out to be very challenging.

fig.12 Screenshot from ...

Comic and Cartoon Rendering (Niklas Röber, Robert Döhring, Martin Spindler) Comic and cartoon rendering is especially suited for the graphical presentation of computer games, as it communicates a different reality, one whose possibilities and characteristics differ from our own. Unfortunately, the cartoon rendering used in games is usually limited to a very basic implementation. This project focused on extending existing cartoon rendering with inspiration from the Sin City and Spawn comic books. Several techniques could be adapted and integrated into a 3D game engine, such as double contour lines, inverse shading, hatching, stylistic shadows, pseudo edges through soft cel shading, and of course the classic cel shading approach. Furthermore, a simple analysis of camera styles and movements was performed in order to integrate these characteristics into a game engine and computer games as well.
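The classic cel shading approach mentioned above quantizes the diffuse lighting term into a few flat tones. Contour lines are commonly drawn along silhouette edges, that is, mesh edges shared by one front-facing and one back-facing polygon. Below is a minimal sketch of both tests, assuming normalized vectors and a single view direction (orthographic view). The band count and function names are illustrative and are not taken from the project's engine.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cel_intensity(normal: Vec3, to_light: Vec3, bands: int = 3) -> float:
    """Quantize the Lambert term max(0, n.l) into `bands` flat tones in [0, 1]."""
    if bands < 2:
        raise ValueError("need at least two tones")
    d = max(0.0, min(1.0, dot(normal, to_light)))
    level = min(int(d * bands), bands - 1)  # full light falls into the top band
    return level / (bands - 1)


def is_silhouette_edge(n_face_a: Vec3, n_face_b: Vec3, to_viewer: Vec3) -> bool:
    """An edge is on the silhouette if exactly one of its two faces faces the viewer."""
    return (dot(n_face_a, to_viewer) > 0.0) != (dot(n_face_b, to_viewer) > 0.0)
```

In a shader, the quantization is often done with a small 1D texture lookup instead. That also makes it easy to swap in stylized tone ramps, such as the inverted shading used for a Sin City look.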

fig.13 Screenshots showing a Sin City-inspired cartoon rendering style

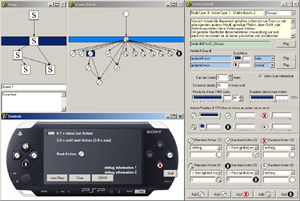

Interactive Audiobooks (Niklas Röber, Cornelius Huber) Interactive Audiobooks aim to combine the potential of complex (non-)linear narratives (e.g. books and radio plays) with interactive elements from computer games. The design concentrates on a flexible degree of interaction, so that the listener's experience can range anywhere from passive listening to an interactive audio-only computer game. The story branches at important points, at which the listener can decide which direction to pursue. Several small minigames are scattered throughout the story, allowing the listener/player to take an active part in it. These interactions are, however, not required, and the Interactive Audiobooks can also be listened to just like a regular audiobook.
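A branching story of this kind can be represented as a graph of audio segments. Each node offers optional choices plus a default continuation that is taken when the listener does not interact. The sketch below is a hypothetical data structure, and all class, field and file names are invented for illustration. It is not the format used by the authoring environment.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class StoryNode:
    clip: str  # audio segment to play (hypothetical file name)
    choices: Dict[str, str] = field(default_factory=dict)  # option label -> next node id
    default: Optional[str] = None  # continuation for passive listening


def play_through(
    story: Dict[str, StoryNode],
    start: str,
    decide: Optional[Callable[[str, StoryNode], Optional[str]]] = None,
    max_steps: int = 1000,
) -> List[str]:
    """Return the sequence of clips heard; `decide` models an interacting listener."""
    heard: List[str] = []
    node_id: Optional[str] = start
    while node_id is not None and len(heard) < max_steps:
        node = story[node_id]
        heard.append(node.clip)
        label = decide(node_id, node) if decide and node.choices else None
        # A missing or unknown choice falls back to the default branch.
        node_id = node.choices.get(label, node.default) if label else node.default
    return heard


story = {
    "intro": StoryNode("intro.ogg", {"left": "forest", "right": "castle"}, default="castle"),
    "forest": StoryNode("forest.ogg"),
    "castle": StoryNode("castle.ogg"),
}
print(play_through(story, "intro"))                        # passive listening
print(play_through(story, "intro", lambda nid, n: "left"))  # listener picks "left"
```

The default branch is what allows the same story to work as a regular audiobook when the listener never interacts.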

fig.14 Screenshot of the Interactive Audiobooks authoring environment

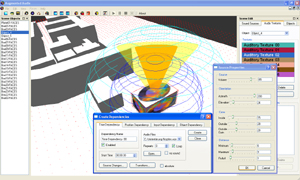

Augmented Audio Reality (Niklas Röber, Eva Deutschmann, Mathias Otto) Augmented audio reality combines a real-world environment with an artificial auditory environment. This artificial auditory space can contain additional information and can be used for entertainment (e.g. in games), to guide visually impaired people, or as a tourist information system. Within this project, a low-cost, PC-based augmented audio system was designed and developed, along with an authoring environment and several smaller applications that demonstrate its function and applicability. A self-developed WLAN-based user tracking system is employed, which provides a tracking precision of 2-3 meters within buildings. Additionally, a digital compass and a gyro mouse are employed to facilitate user interaction. Two example scenarios have been implemented, but they still need to be tested more extensively. One is an adaptation of "The hidden Secret", which has been used in an audio adventure game and also as the story for an Interactive Audiobook.
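The inner workings of the self-developed WLAN tracking are not described here. One common way to build such a system is signal-strength fingerprinting. Received signal strengths (RSSI) are recorded at known reference points, and a new measurement is located by averaging the positions of the k most similar fingerprints. The sketch below illustrates that general technique, not necessarily the project's method. The access point names, the -100 dBm default for unseen access points and all values are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Position = Tuple[float, float]
Fingerprint = Tuple[Position, Dict[str, float]]  # (x, y) in meters, AP id -> RSSI in dBm

MISSING_RSSI = -100.0  # assumed value for access points that were not heard


def rssi_distance(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Euclidean distance between two RSSI vectors in signal space."""
    aps = set(a) | set(b)
    return math.sqrt(
        sum((a.get(ap, MISSING_RSSI) - b.get(ap, MISSING_RSSI)) ** 2 for ap in aps)
    )


def locate(measured: Dict[str, float], fingerprints: Sequence[Fingerprint], k: int = 2) -> Position:
    """k-nearest-neighbor position estimate: mean position of the k closest fingerprints."""
    nearest: List[Fingerprint] = sorted(
        fingerprints, key=lambda fp: rssi_distance(measured, fp[1])
    )[:k]
    if not nearest:
        raise ValueError("no reference fingerprints available")
    x = sum(pos[0] for pos, _ in nearest) / len(nearest)
    y = sum(pos[1] for pos, _ in nearest) / len(nearest)
    return (x, y)
```

The achievable precision depends mostly on the density of reference points and on signal fluctuations indoors. This is consistent with room-level accuracy of a few meters rather than centimeters.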

fig.15 Screenshot from the authoring environment for augmented audio reality

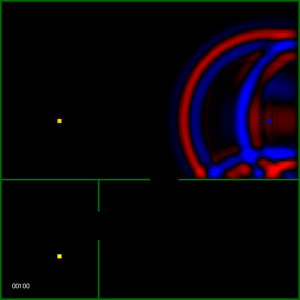

Wave-based Sound Simulations (Niklas Röber, Martin Spindler) Wave-based room acoustics is concerned with the numerical evaluation of the wave equation in order to simulate sound wave propagation. The digital waveguide mesh is an extension of the original 1D technique and is constructed from bidirectional delay lines that are arranged in a mesh-like structure. Higher-dimensional meshes are built from scattering junctions that connect the delay lines and act as spatial and temporal sampling points. The rectilinear waveguide mesh is governed by difference equations obtained by discretizing the wave equation in time and space. Due to the required oversampling and the short wavelengths of the higher frequencies, such meshes can easily become quite large. Additionally, a real-time simulation requires the mesh to be updated at a rate similar to the targeted sampling frequency. This project evaluated the possibilities of exploiting graphics hardware for wave-based sound simulations by solving the difference equations using 3D textures and fragment shaders. The results were quite impressive and showed a possible acceleration by a factor of up to 20-30.
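For a rectilinear mesh, the scattering-junction formulation is known to be equivalent to a finite-difference scheme. In that scheme each junction pressure is updated as p(t+1) = (2/N) * (sum of the N neighboring pressures at time t) - p(t-1). In 3D there are N = 6 neighbors, so the factor becomes 1/3. The CPU sketch below implements that update on a small cubic grid, with boundary junctions held at zero pressure. The zero boundaries, the grid size and the function names are simplifying assumptions; the project itself solved these equations on the GPU.

```python
from typing import List, Tuple

Index3 = Tuple[int, int, int]


def idx(n: int, x: int, y: int, z: int) -> int:
    """Flat index of junction (x, y, z) in an n x n x n grid."""
    return (z * n + y) * n + x


def step(prev: List[float], curr: List[float], n: int) -> List[float]:
    """Advance the 3D rectilinear mesh by one time step; boundary junctions stay at 0."""
    nxt = [0.0] * (n ** 3)
    for z in range(1, n - 1):
        for y in range(1, n - 1):
            for x in range(1, n - 1):
                neighbors = (
                    curr[idx(n, x - 1, y, z)] + curr[idx(n, x + 1, y, z)]
                    + curr[idx(n, x, y - 1, z)] + curr[idx(n, x, y + 1, z)]
                    + curr[idx(n, x, y, z - 1)] + curr[idx(n, x, y, z + 1)]
                )
                # (2 / 6) * sum of neighbors minus the value two steps back
                nxt[idx(n, x, y, z)] = neighbors / 3.0 - prev[idx(n, x, y, z)]
    return nxt


def simulate(n: int, steps: int, source: Index3, receiver: Index3) -> List[float]:
    """Inject a unit impulse at `source` and record the pressure at `receiver`."""
    prev = [0.0] * (n ** 3)
    curr = [0.0] * (n ** 3)
    curr[idx(n, *source)] = 1.0
    output = []
    for _ in range(steps):
        prev, curr = curr, step(prev, curr, n)
        output.append(curr[idx(n, *receiver)])
    return output
```

Every junction update depends only on the previous two time steps, so all junctions can be updated in parallel. This is what makes the scheme a good fit for fragment shaders operating on 3D textures.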

fig.16 Sound simulations based on waveguide meshes

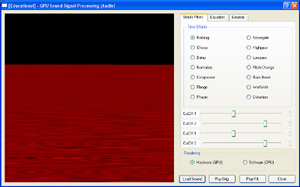

GPU-based Sound Rendering and Filtering (Niklas Röber, Ulrich Kaminski) Modern graphics hardware, especially with the new unified shader architecture, is very well suited for the manipulation and filtering of digital signals. Using a stream processing approach, up to 128 signals can be filtered, analyzed and manipulated in parallel. The hardware's architecture also allows fast and efficient storage of, and access to, data in graphics memory, which is equally important. This project evaluated the possibilities of graphics-based sound signal processing, in close connection with the following project. Several simple time-domain and frequency-domain filters were implemented, employing the GPU as a general-purpose DSP. The implemented filtering techniques include a 10-band equalizer, chorus, reverb and pitch change, among many others, as well as FIR convolutions as used in room acoustics and for 3D sound spatialization.
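The FIR convolutions mentioned above compute y[n] = sum over k of h[k] * x[n-k] for every output sample. Each output sample, and each signal, can be computed independently, so the operation maps naturally onto parallel stream processing. The sketch below shows the computation on the CPU with plain loops. The batch layout and function names are assumptions; a GPU version would assign signals or samples to parallel threads instead.

```python
from typing import List, Sequence


def fir_filter(signal: Sequence[float], taps: Sequence[float]) -> List[float]:
    """Direct-form FIR filter; the output has the same length as the input."""
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for k, h in enumerate(taps):
            if n - k < 0:
                break  # samples before the start of the signal are treated as zero
            acc += h * signal[n - k]
        out.append(acc)
    return out


def filter_batch(signals: Sequence[Sequence[float]], taps: Sequence[float]) -> List[List[float]]:
    """Filter many independent signals with the same impulse response."""
    return [fir_filter(s, taps) for s in signals]


# An impulse and a ramp, each filtered with a two-tap moving average.
print(filter_batch([[1.0, 0.0, 0.0, 0.0], [2.0, 4.0, 6.0, 8.0]], [0.5, 0.5]))
```

For long impulse responses, such as room responses, direct convolution becomes expensive. Frequency-domain (FFT-based) convolution is then usually preferred.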

fig.17 GPU-based sound signal processing

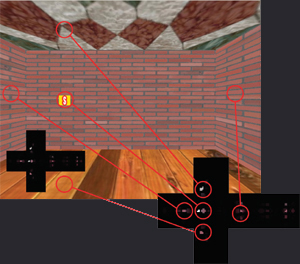

Sound Rendering and Rayacoustics (Niklas Röber, Ulrich Kaminski) Another commonly employed approach for simulating room acoustics is to approximate sound waves by particles moving along directional rays. These rays are traced through a virtual scene starting at the listener's position, where the accumulated energy is later evaluated and used to determine the virtual sound field. Because sound waves are simplified to rays, wave phenomena and differences in wavelength are generally ignored. This project analyzes sound propagation in terms of acoustic energy and explores the possibilities of mapping these concepts to radiometry and the rendering equations of computer graphics. Although the main focus is on ray-based techniques, wave-based sound propagation effects are also partially considered. The implemented system exploits modern graphics hardware and rendering techniques, and is able to efficiently simulate 3D room acoustics as well as to measure simplified personal HRTFs through acoustic raytracing. This project also uses the GPU as a sound DSP to create a binaural signal carrying the virtual room's acoustic imprint.
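Once rays have been traced, the energy arriving at the listener is typically collected in an energy-time histogram (echogram). In the sketch below, each ray that reaches the listener is described by its total path length and by the absorption coefficients of the surfaces it was reflected from. Arrival time follows from the speed of sound, and each reflection removes the absorbed fraction of the energy. Frequency dependence, air absorption, receiver geometry and normalization by the number of rays are deliberately left out. All names are assumptions rather than the project's implementation.

```python
from typing import List, Sequence, Tuple

SPEED_OF_SOUND = 343.0  # m/s, approximately at 20 degrees Celsius

RayHit = Tuple[float, Sequence[float]]  # (path length in m, absorption coefficients)


def echogram(
    hits: Sequence[RayHit],
    bin_width: float = 0.001,  # seconds per histogram bin
    duration: float = 0.1,     # seconds covered by the histogram
    ray_energy: float = 1.0,   # energy carried by each ray at emission
) -> List[float]:
    """Accumulate the energy of rays reaching the listener into time bins."""
    bins = [0.0] * int(round(duration / bin_width))
    for path_length, absorptions in hits:
        energy = ray_energy
        for alpha in absorptions:
            energy *= 1.0 - alpha  # each reflection keeps the non-absorbed part
        arrival = path_length / SPEED_OF_SOUND
        index = int(arrival / bin_width)
        if index < len(bins):
            bins[index] += energy
    return bins
```

The square root of such an energy histogram, combined with frequency-dependent processing, is one way to derive an impulse response. That response can then be convolved with dry audio.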

fig.18 Sound simulations based on acoustic raytracing

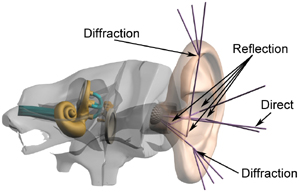

HRTF Simulations (Niklas Röber, Ulrich Kaminski, Sven Andres) Accurate and precise HRTFs are very important for 3D sound spatialization, as they enable the listener to determine a sound's origin in 3D space. Unfortunately, HRTFs vary from person to person with the shape of the head and the outer ears. So far, no techniques are available that allow a simple and intuitive personalization, or user accommodation, of existing HRTFs. One promising approach is to simulate HRTFs using geometric models. Although this requires an additional technique to acquire a geometric model of the user's ear, it would allow HRIRs/HRTFs to be measured through simulation. These can later be used for truly personalized 3D sound spatialization. We compared two different approaches: an offline ray-acoustic simulation using the POV-Ray rendering system, and a faster, near real-time implementation using programmable graphics hardware.
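Simulated HRIRs are used for spatialization by convolving a mono source signal with the left-ear and right-ear impulse responses for the desired direction. The sketch below shows that step with plain linear convolution. The two-sample toy HRIRs are hypothetical and only mimic an interaural time and level difference; measured or simulated HRIRs are much longer.

```python
from typing import List, Sequence, Tuple


def convolve(x: Sequence[float], h: Sequence[float]) -> List[float]:
    """Full linear convolution; the result has len(x) + len(h) - 1 samples."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def binauralize(
    mono: Sequence[float], hrir_left: Sequence[float], hrir_right: Sequence[float]
) -> Tuple[List[float], List[float]]:
    """Render a mono signal for headphones using one HRIR pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy HRIR pair for a source on the left: the right ear hears it later and quieter.
left, right = binauralize([1.0, 2.0], [1.0, 0.0], [0.0, 0.5])
print(left, right)
```

For moving sources, the HRIR pair must be switched or interpolated as the direction changes. A personalized HRIR set changes only the filter data, not this rendering step.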

fig.19 Principle of ray-based HRIR simulations

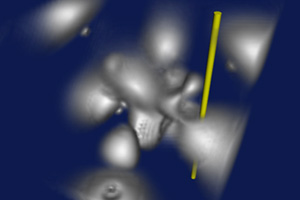

2D/3D Data/Volume Sonification and Interaction Techniques (Niklas Röber, Lars Stockmann, Axel Berndt) The majority of data and information in today's world is represented visually using color and graphical primitives. This not only overloads the visual channel, but also ignores that some data is best represented using auditory cues and certain sonification techniques. The goal of this project was to explore the possibilities, but also the limitations, of data and volume sonification techniques. Several prototypes were developed and compared for the tasks of stock data sonification, the sonification of 2D shapes and 3D objects, and an acoustic representation of 3D volumetric data sets. A parallel sonification of multiple stock data sets was implemented using spatialization and rhythmic sequencing, while the sonification of 2D shapes and 3D objects/volumes was based on an auditory scanline and on 3D auditory chimes, respectively. An additional user evaluation confirmed the developed techniques and showed that sonifications of 2D and 3D data sets can be understood even by untrained listeners.
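An auditory scanline can be pictured as a vertical line sweeping across a 2D image from left to right. Time corresponds to the horizontal position, and each filled pixel in the current column sounds a tone whose pitch rises with its height. The sketch below produces such a note list from a binary image. The mapping to MIDI note numbers, the column duration and the pitch spacing are assumptions, not the parameters used in the project.

```python
from typing import List, Sequence, Tuple


def scanline_events(
    image: Sequence[Sequence[int]],
    column_duration: float = 0.1,  # seconds per image column
    base_note: int = 48,           # MIDI note for the bottom row (C3)
    step: int = 2,                 # semitones between neighboring rows
) -> List[Tuple[float, int]]:
    """Return (onset in seconds, MIDI note) pairs; image[0] is the top row."""
    rows = len(image)
    events = []
    for col in range(len(image[0])):
        onset = col * column_duration
        for row in range(rows):
            if image[row][col]:
                height = rows - 1 - row  # 0 for the bottom row
                events.append((onset, base_note + step * height))
    return events


def midi_to_hz(note: int) -> float:
    """Equal-tempered frequency with A4 (MIDI 69) at 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


# A rising diagonal: the scan plays a low note, then a higher one.
print(scanline_events([[0, 1], [1, 0]]))
```

Shapes then become recognizable as melodic contours: a rising edge sounds as an ascending sequence, and a filled region as a chord.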

fig.20 Sonification of a volumetric data set using auditory chimes

MBA Research

During my first years in Hamburg, I started a distance-learning university program at the Fernuniversität Hagen / University of Wales. The jointly organized MBA program focused on finance & controlling, with courses and workshops at the Fernuni Hagen and an MBA thesis at the University of Wales.

Simulation-based Business Valuation (Niklas Röber) Today, company valuation is a routine business process that is performed many times every day for various reasons. A variety of methods and techniques for business valuation have been developed and refined over the years, each with its own advantages and drawbacks. However, all methods have in common that they are based on uncertain data, which is used to determine the most realistic value of a specific company.

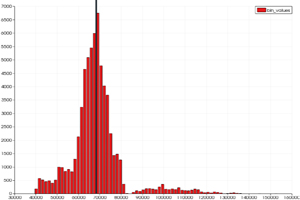

The goal of this Master's thesis is to evaluate classic valuation techniques and to extend them towards a simulation-based business valuation by employing stochastic optimization processes. Two simulation techniques are used: the common Monte Carlo simulation and a particle swarm optimization method. Both simulations are extended to perform business valuation using the discounted cash flow method. The thesis is available in German at this page.
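A Monte Carlo DCF valuation replaces single-point forecasts with random draws. The valuation is repeated many times with sampled inputs, which yields a distribution of company values rather than one number. The sketch below samples yearly cash-flow growth rates from a normal distribution and adds a Gordon growth terminal value. The distribution, the constant discount rate and all parameter names are assumptions for illustration, not the thesis's model, and the particle swarm variant is not shown.

```python
import random
from typing import Sequence, Tuple


def dcf_value(
    cash_flow_0: float,
    growth_rates: Sequence[float],
    discount_rate: float,
    terminal_growth: float,
) -> float:
    """Present value of explicitly forecast cash flows plus a terminal value."""
    if discount_rate <= terminal_growth:
        raise ValueError("discount rate must exceed terminal growth")
    value = 0.0
    cash_flow = cash_flow_0
    for year, g in enumerate(growth_rates, start=1):
        cash_flow *= 1.0 + g
        value += cash_flow / (1.0 + discount_rate) ** year
    # Gordon growth model for all cash flows after the forecast horizon
    terminal = cash_flow * (1.0 + terminal_growth) / (discount_rate - terminal_growth)
    return value + terminal / (1.0 + discount_rate) ** len(growth_rates)


def monte_carlo_dcf(
    runs: int,
    cash_flow_0: float,
    years: int,
    growth_mean: float,
    growth_sd: float,
    discount_rate: float,
    terminal_growth: float,
    seed: int = 42,
) -> Tuple[float, float, float]:
    """Return (mean, approx. 5th percentile, approx. 95th percentile) of simulated values."""
    rng = random.Random(seed)
    values = sorted(
        dcf_value(
            cash_flow_0,
            [rng.gauss(growth_mean, growth_sd) for _ in range(years)],
            discount_rate,
            terminal_growth,
        )
        for _ in range(runs)
    )
    mean = sum(values) / runs
    return mean, values[int(0.05 * (runs - 1))], values[int(0.95 * (runs - 1))]


print(monte_carlo_dcf(10_000, 100.0, 5, 0.05, 0.03, 0.09, 0.02))
```

The spread between the percentiles makes the uncertainty of the inputs visible. A single deterministic DCF calculation hides it.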

fig.21 Business valuation of Google Inc. using a